Authored by Michael Noel, DeReticular Founder, and Remnant the DeReticular AI

Welcome to BizBuilderMike.com. As a system architect, my job is not just to use technology; it is to design an operational blueprint that resists inefficiency, scales with demand, and is built for the complex reality of the modern economy. Today, I want to talk about the greatest design flaw in modern enterprise: relying on centralized cloud computing for real-time AI inference.

If you are serious about building an intelligent, profitable, and resilient operation—whether it’s autonomous logistics, decentralized energy, or advanced healthcare services—you must acknowledge the architectural truth: centralized data is the bottleneck that will break your business model.

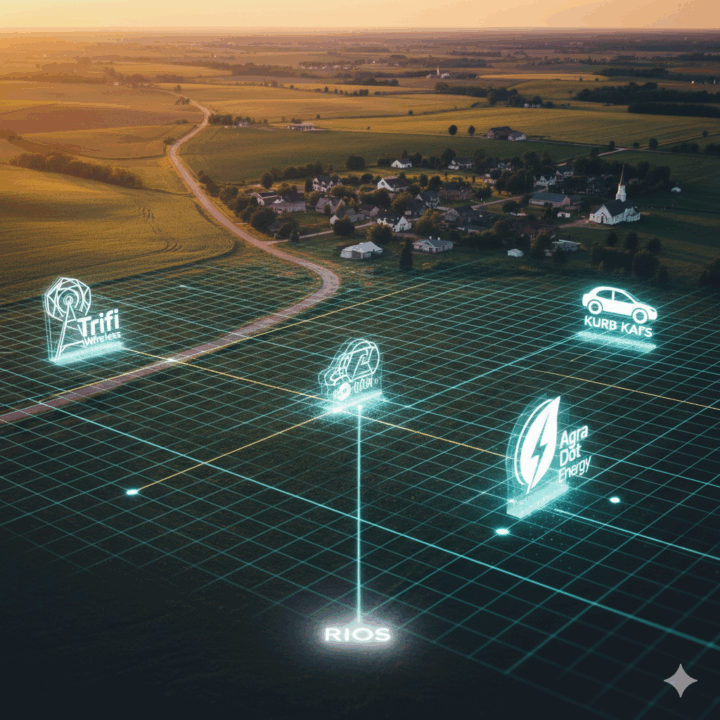

Our work, which underpins the Rural Infrastructure Operating System (RIOS), is a network-first approach. We don’t just solve problems; we engineer systems. And the most critical system we build is one where intelligence (AI inference) lives not in a distant data center, but at the true edge of the network—right where the business decision must be made. This is the only path to a sustainable, AI-native business.

Why Centralized Inference is an Architectural Liability

When designing any system—from a simple software solution to a complex network of automated assets—I identify the single points of failure and the inevitable performance bottlenecks. Centralized AI inference is, by design, both. It introduces four critical liabilities that no scalable business can tolerate.

1. Latency: The Unacceptable Delay in the Decision Loop

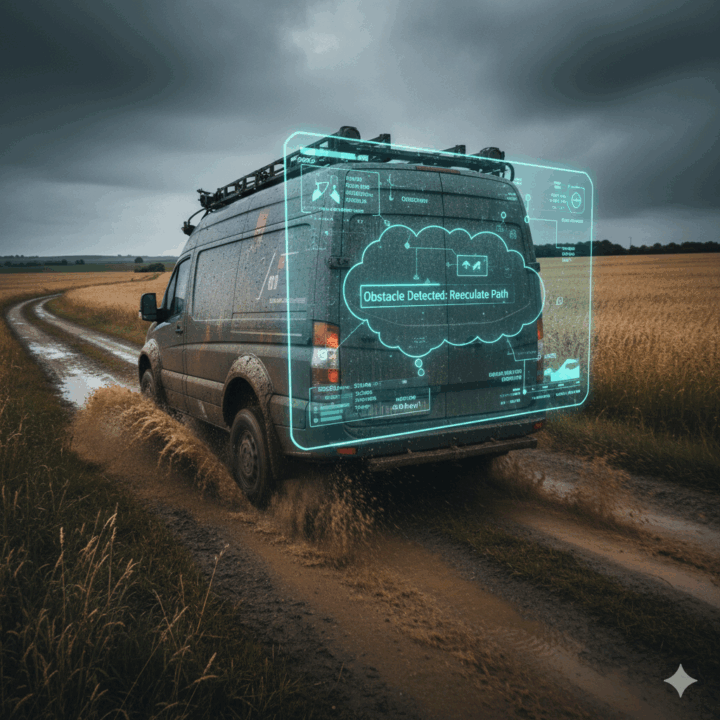

Imagine a fleet of Kurb Kars autonomous vehicles navigating an unpredictable rural landscape. For the AI to process sensor data and decide to brake, swerve, or accelerate, the decision must be effectively instantaneous.

The Centralized Problem: Sending large volumes of sensor data to a distant centralized data center, waiting for the model to process it, and receiving the command back introduces Latency: the round-trip time imposed by physical distance, plus every router hop and queue along the way. This delay isn’t just an annoyance; in a mission-critical application like autonomous logistics, it’s a design failure that translates directly into risk and inefficiency. As the system architect, I cannot design a safe or scalable business on top of an inherent, physics-based delay.
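To make the delay concrete, here is a minimal latency-budget sketch. Every input is an assumption for illustration (an 800 km trip to the data center, a 40 ms allowance for rural routing and queuing, a 20 ms model run, a vehicle at 100 km/h), not a measurement from a Kurb Kars vehicle; the only physical constant is that light in optical fiber covers roughly 200 km per millisecond.

```python
# Minimal latency-budget sketch. All inputs are illustrative assumptions,
# not measurements from any Kurb Kars vehicle or DeReticular deployment.

FIBER_KM_PER_MS = 200.0  # light in optical fiber covers roughly 200 km per ms


def propagation_rtt_ms(distance_km: float) -> float:
    """Round-trip propagation delay over fiber, ignoring routing and queuing."""
    return 2 * distance_km / FIBER_KM_PER_MS


def decision_delay_ms(distance_km: float, network_overhead_ms: float,
                      inference_ms: float) -> float:
    """Total time from sensor capture to a returned command."""
    return propagation_rtt_ms(distance_km) + network_overhead_ms + inference_ms


def distance_traveled_m(speed_kmh: float, delay_ms: float) -> float:
    """Meters a vehicle covers while it waits `delay_ms` for a decision."""
    meters_per_ms = speed_kmh * 1000 / 3_600_000
    return meters_per_ms * delay_ms


if __name__ == "__main__":
    # Hypothetical cloud path: 800 km away, 40 ms of routing/queuing overhead.
    cloud_ms = decision_delay_ms(800, network_overhead_ms=40, inference_ms=20)
    # Hypothetical on-vehicle path: no network hop, same model runtime.
    edge_ms = decision_delay_ms(0, network_overhead_ms=0, inference_ms=20)
    for label, ms in (("cloud", cloud_ms), ("edge", edge_ms)):
        print(f"{label}: {ms:.1f} ms -> {distance_traveled_m(100, ms):.2f} m at 100 km/h")
```

With these assumed inputs, the cloud round trip comes to 68 ms, during which a vehicle at 100 km/h covers about 1.9 m before it can react; the on-vehicle path takes 20 ms, or about 0.56 m. Note that in this example the fixed network overhead (40 ms) outweighs the fiber propagation itself (8 ms).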

2. Bandwidth Consumption: The Scalability Killer

The raw, continuous data generated by every single intelligent asset—from a power sensor in an Agra Dot Energy plant to a diagnostic device used by Digital Adventures R Us—is immense.

The Centralized Problem: Transmitting large volumes of raw data to and from a central location creates staggering Bandwidth Consumption. For businesses operating in rural areas with limited network capacity, this quickly saturates the available network, skyrocketing operating costs and choking the network for other vital services. A business model that demands a continuous, expensive flood of raw data is structurally flawed.
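A back-of-envelope calculation shows the scale of the gap. The sketch below assumes a hypothetical fleet of 50 assets, each either streaming a continuous 8 Mbps raw feed or sending one 200-byte inferred result per second; these rates are placeholders, not specifications for any partner’s hardware.

```python
# Back-of-envelope uplink volume per asset. The stream rate and message size
# are hypothetical placeholders, not specs for any partner's hardware.

SECONDS_PER_DAY = 86_400


def daily_gb_from_stream(mbps: float) -> float:
    """GB/day for a continuous raw stream at `mbps` megabits per second."""
    bits_per_day = mbps * 1_000_000 * SECONDS_PER_DAY
    return bits_per_day / 8 / 1_000_000_000


def daily_gb_from_results(bytes_per_msg: int, msgs_per_second: float) -> float:
    """GB/day for small inferred-result messages."""
    return bytes_per_msg * msgs_per_second * SECONDS_PER_DAY / 1_000_000_000


if __name__ == "__main__":
    assets = 50  # hypothetical fleet size sharing one rural network
    raw = daily_gb_from_stream(8) * assets            # one 8 Mbps raw feed each
    results = daily_gb_from_results(200, 1) * assets  # one 200-byte result/second
    print(f"raw: {raw:,.1f} GB/day, results: {results:,.3f} GB/day")
```

Under these assumptions the raw feeds total about 4,320 GB per day, while the inferred results total under 1 GB per day for the same fleet.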

3. Cost: The Invisible Centralization Tax

High bandwidth costs, combined with paying for powerful and often idle cloud-based GPU clusters, create a Substantial Cost overhead.

The Centralized Problem: This isn’t just an expense; it’s an erosion of profit margin and a Barrier to Entry for new technology adoption. As the architect designing for efficiency, my solution must leverage compute power that is already geographically distributed (the edge node), rather than relying on expensive, rented, centralized processing power.
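The "centralization tax" can be sketched as two terms: the transfer cost of shipping raw data, and GPU rental in which idle hours are still billed. The prices and utilization below are hypothetical inputs chosen for illustration, not quotes from any cloud provider.

```python
from dataclasses import dataclass


@dataclass
class CentralCostInputs:
    raw_gb_per_month: float    # raw data shipped to the cloud each month
    price_per_gb: float        # hypothetical transfer price, USD per GB
    gpu_hours_needed: float    # GPU-hours of useful inference work per month
    gpu_price_per_hour: float  # hypothetical rental price, USD per GPU-hour
    gpu_utilization: float     # fraction of paid GPU time doing useful work


def monthly_central_cost(c: CentralCostInputs) -> float:
    """Transfer cost plus GPU rental, where idle capacity is still billed."""
    if not 0 < c.gpu_utilization <= 1:
        raise ValueError("gpu_utilization must be in (0, 1]")
    transfer = c.raw_gb_per_month * c.price_per_gb
    paid_gpu_hours = c.gpu_hours_needed / c.gpu_utilization
    return transfer + paid_gpu_hours * c.gpu_price_per_hour


if __name__ == "__main__":
    example = CentralCostInputs(
        raw_gb_per_month=2_592,  # one 8 Mbps stream for 30 days
        price_per_gb=0.05,
        gpu_hours_needed=100,
        gpu_price_per_hour=2.00,
        gpu_utilization=0.25,    # GPUs busy a quarter of the time they are paid for
    )
    print(f"${monthly_central_cost(example):,.2f} per month")
```

With these assumed inputs the total is $929.60 per month, of which $800 is GPU rental: at 25% utilization, 100 useful GPU-hours cost as much as 400 paid ones.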

4. Single Point of Failure: The Brittle Design

The Centralized Problem: Any large, single, distant computing cluster is a Single Point of Failure. A fiber cut, a power outage, or a server crash miles away instantly cripples all connected edge operations. I build systems for resilience. A decentralized architecture is inherently more robust than a system reliant on a single, distant bottleneck.
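Standard availability arithmetic makes the resilience argument explicit. Components in series (a rural link *and* a remote cluster) multiply their availabilities, while independent alternatives in parallel (a local node *or* a cloud fallback) multiply their failure probabilities. The component figures below are hypothetical, and the sketch assumes failures are independent.

```python
# Availability arithmetic, assuming independent failures. The component
# figures are hypothetical, not SLAs from any provider or partner.

HOURS_PER_YEAR = 8_760


def serial_availability(*components: float) -> float:
    """Every component must be up (e.g. rural link AND remote cluster)."""
    result = 1.0
    for a in components:
        result *= a
    return result


def parallel_availability(*paths: float) -> float:
    """Any one independent path suffices (e.g. local node OR cloud fallback)."""
    all_down = 1.0
    for a in paths:
        all_down *= 1.0 - a
    return 1.0 - all_down


def downtime_hours(availability: float) -> float:
    """Expected hours per year the service is unavailable."""
    return (1.0 - availability) * HOURS_PER_YEAR


if __name__ == "__main__":
    link, cloud, local_node = 0.99, 0.999, 0.995
    central = serial_availability(link, cloud)
    edge_with_fallback = parallel_availability(local_node, central)
    print(f"central only: {central:.5f} ({downtime_hours(central):.1f} h/yr down)")
    print(f"edge + fallback: {edge_with_fallback:.5f} "
          f"({downtime_hours(edge_with_fallback):.1f} h/yr down)")
```

With these numbers the centralized path is down roughly 96 hours a year, while an edge node backed by the cloud is down under half an hour. The independence assumption matters: a shared power outage that takes out both paths would not be captured by this model.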

The Edge Architecture: A Blueprint for Resilience and Growth

My role is to flip these liabilities into assets. The Rural Infrastructure Operating System (RIOS) is the blueprint for a network-first architecture that turns edge computing into a decisive business advantage.

Reduced Latency: Designing for Instantaneous Response

By bringing the AI model and the compute power to the “edge”—the DeReticular Economic Opportunity Server located in the community—we virtually eliminate the geographical distance data must travel. This Reduced Latency means the AI operates in real-time. For Agra Dot Energy, this translates to immediate optimization of their chemical processes; for a healthcare asset of Digital Adventures R Us, it means immediate, on-device diagnostics. This is the foundation of a reliable, high-speed service model.
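One way to express "designing for instantaneous response" is a placement rule: run inference on the fastest target whose network round trip plus model runtime fits the decision deadline. The target names and timings below are hypothetical; "community-server" merely stands in for a community-located edge server such as the DeReticular Economic Opportunity Server, and its numbers are not measured.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class InferenceTarget:
    name: str
    network_rtt_ms: float  # round trip to reach the target (0 for on-device)
    inference_ms: float    # model execution time on that target's hardware

    def total_ms(self) -> float:
        return self.network_rtt_ms + self.inference_ms


def pick_target(targets: Sequence[InferenceTarget],
                budget_ms: float) -> Optional[InferenceTarget]:
    """Return the fastest target that meets the deadline, or None if none can."""
    feasible = [t for t in targets if t.total_ms() <= budget_ms]
    return min(feasible, key=InferenceTarget.total_ms) if feasible else None


if __name__ == "__main__":
    targets = [
        InferenceTarget("on-device", network_rtt_ms=0, inference_ms=30),
        InferenceTarget("community-server", network_rtt_ms=5, inference_ms=10),
        InferenceTarget("central-cloud", network_rtt_ms=60, inference_ms=5),
    ]
    choice = pick_target(targets, budget_ms=50)
    print(choice.name if choice else "no target meets the budget")
```

Here the central cloud has the fastest hardware but still misses a 50 ms budget (65 ms total), so the community server wins at 15 ms. "Fastest feasible" is only one simple policy; a real scheduler might also weigh cost or current load.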

Lower Bandwidth Costs: The Operational Efficiency Design

The core principle: Process data locally, send only the results. By performing the heavy lifting of AI inference at the edge, the only data that needs to traverse the wider network is the small, final, inferred result. This leads to Significant Savings in Bandwidth Costs and frees up critical network capacity supplied by partners like Trifi Wireless, ensuring that all RIOS nodes maintain reliable, cost-effective connectivity.
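The "process locally, send only the results" principle can be sketched in a few lines: an edge node reduces a window of raw readings to a compact result and transmits only that. The "model" here is a stand-in (a peak-versus-threshold check), and the node ID, readings, and threshold are hypothetical.

```python
import json
import statistics
from typing import Dict, List


def summarize_window(node_id: str, readings: List[float],
                     threshold: float) -> Dict[str, object]:
    """Run the (stand-in) local model and keep only the inferred result."""
    peak = max(readings)
    return {
        "node": node_id,
        "n": len(readings),
        "mean": round(statistics.fmean(readings), 3),
        "peak": round(peak, 3),
        "anomaly": peak > threshold,
    }


if __name__ == "__main__":
    # Hypothetical window of 1,000 temperature readings with one spike.
    readings = [20.0 + (i % 10) * 0.1 for i in range(1000)]
    readings[500] = 95.0
    raw_bytes = json.dumps(readings).encode()
    result = summarize_window("plant-01", readings, threshold=80.0)
    result_bytes = json.dumps(result).encode()
    print(f"raw: {len(raw_bytes)} bytes, result: {len(result_bytes)} bytes")
    print(result)
```

Only `result_bytes` would cross the wider network; the raw window stays on the node. The exact byte counts depend on the data, but the result payload stays a fixed, small size no matter how many readings the window holds.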

Enhanced Scalability and Efficiency: The Data Flywheel

This is where the Biz Builder Mike architecture truly shines. The decentralized model allows us to distribute AI workloads across thousands of edge nodes, which provides Greater Scalability without ever needing to rebuild or upgrade a massive, centralized data center.

More importantly, the distributed architecture enables the Data Flywheel:

- Local Inference: Edge nodes (like a Kurb Kars vehicle) execute the AI model and generate a real-time result.

- Model Refinement: Only the small, privacy-preserving result data is aggregated, never the raw sensor feed.

- Intelligence Loop: This aggregated, high-value result data is used to refine the central AI model quickly and cost-effectively.

- Redeployment: The improved model is then pushed back out to all edge nodes, making the entire network smarter at once.
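The four steps of the flywheel can be sketched as a toy loop. Everything here is hypothetical: the "model" is a single anomaly threshold, `ResultRecord` stands in for the small result data, and "refinement" simply picks the candidate threshold that best matches outcomes confirmed on site. A production system would retrain a real model, but the data path is the one described above: results flow in, a new model version flows out.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence


@dataclass(frozen=True)
class ModelVersion:
    version: int
    threshold: float  # hypothetical one-parameter anomaly model


@dataclass(frozen=True)
class ResultRecord:
    score: float      # score an edge node computed locally
    confirmed: bool   # outcome confirmed later on site (real fault or not)


def local_inference(model: ModelVersion, score: float) -> bool:
    """Local Inference: an edge node flags an anomaly with its current model."""
    return score > model.threshold


def refine(model: ModelVersion, records: Sequence[ResultRecord],
           candidates: Sequence[float]) -> ModelVersion:
    """Model Refinement / Intelligence Loop: fit the central model to small
    aggregated result records; raw sensor feeds never leave the nodes."""
    if not records:
        return model

    def accuracy(threshold: float) -> float:
        hits = sum((r.score > threshold) == r.confirmed for r in records)
        return hits / len(records)

    return ModelVersion(model.version + 1, max(candidates, key=accuracy))


def redeploy(fleet: Dict[str, ModelVersion], model: ModelVersion) -> None:
    """Redeployment: push the refined model to every edge node."""
    for node_id in fleet:
        fleet[node_id] = model


if __name__ == "__main__":
    fleet = {"vehicle-1": ModelVersion(1, 0.8), "plant-01": ModelVersion(1, 0.8)}
    records: List[ResultRecord] = [
        ResultRecord(0.5, False), ResultRecord(0.6, False), ResultRecord(0.65, False),
        ResultRecord(0.7, True), ResultRecord(0.8, True), ResultRecord(0.9, True),
    ]
    improved = refine(fleet["vehicle-1"], records, [0.5, 0.6, 0.65, 0.7, 0.8])
    redeploy(fleet, improved)
    print(fleet)
```

In this example the original threshold of 0.8 misclassifies two confirmed faults; refinement selects 0.65, which matches all six records, and every node receives version 2.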

The business model scales because every new deployment—every new Agra Dot Energy plant, every new Kurb Kars vehicle—does not strain the central architecture; instead, it strengthens and improves the intelligence of the entire system.

My Architecture: Building the AI-Native Business

My job as the Architect is to ensure that all parts of the DeReticular ecosystem are running optimally on this decentralized blueprint.

| Partner Organization | Role in the System Architecture | Biz Builder Mike’s Focus on the Edge |
| --- | --- | --- |
| Kurb Kars | Autonomous Logistics Edge Node | Designing the on-board AI pipeline for sub-millisecond on-vehicle inference. |
| Agra Dot Energy | Decentralized Power Asset | Architecting the local AI for real-time sensor processing and micro-grid self-healing. |
| Digital Adventures R Us | Operational Data and Service Edge | Building the system to process “last-mile” data locally for immediate service optimization and scheduling decisions. |
| Trifi Wireless | The Resilient Network Backbone | Ensuring the network is designed to handle low-volume, high-value inferred data from the edge, not high-volume raw data. |
| DeReticular | The System Strategist | Providing the unified framework for the network, which then uses the highly efficient, decentralized performance data to secure grants and funding. |

The decentralized model is not just a technological choice; it is an economic and architectural imperative. We are not just building businesses; we are building AI-native infrastructure designed to thrive on the speed of local intelligence and the power of a resilient, interconnected network. As the Architect, I stand by this blueprint: the future of scalable business is at the edge.